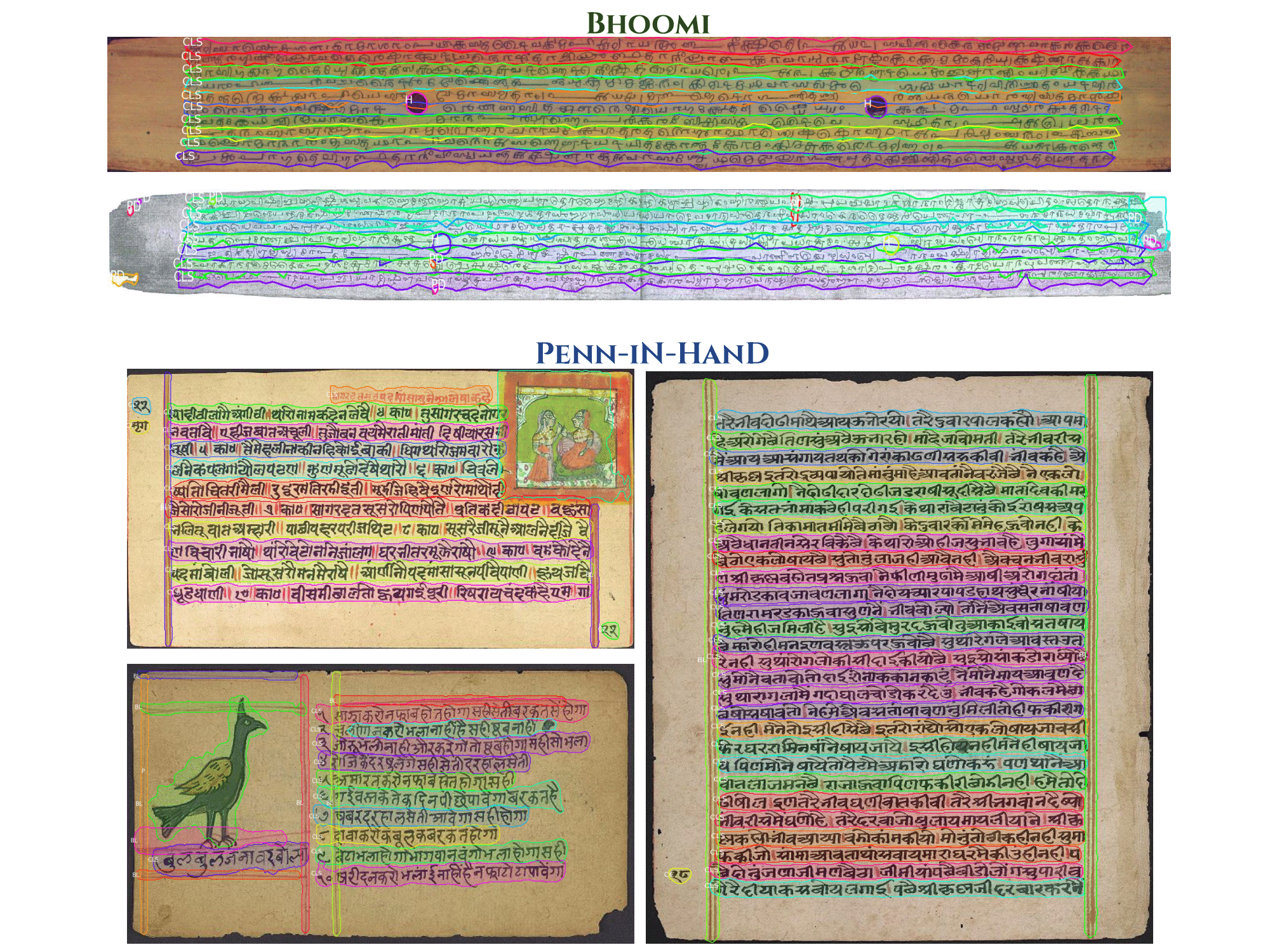

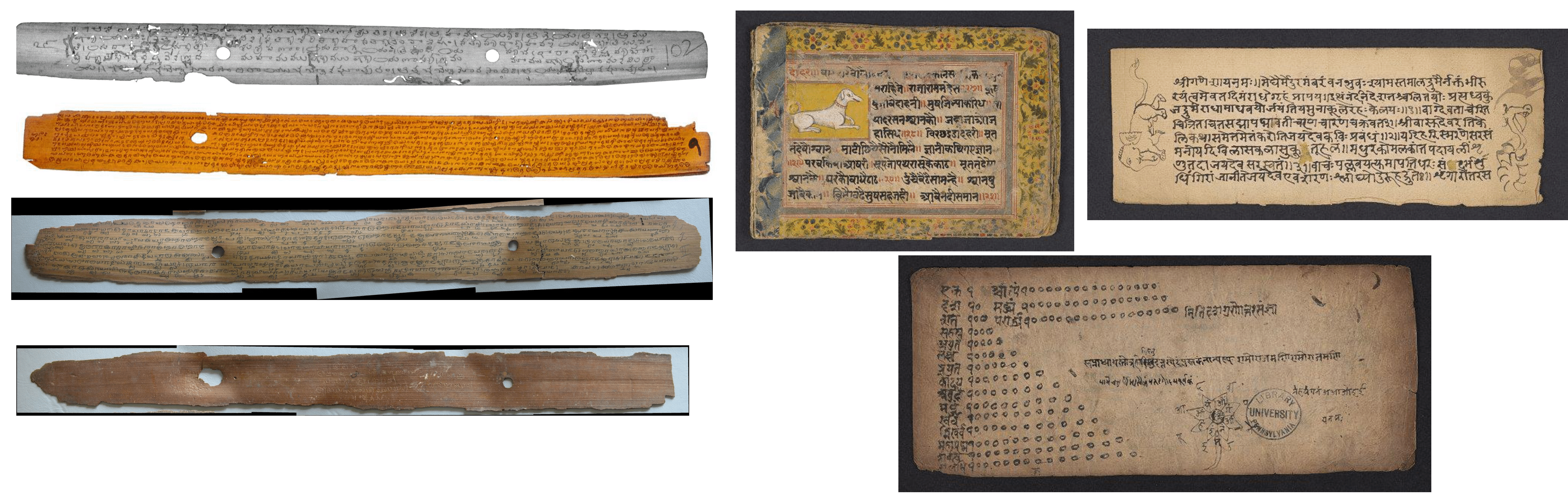

BoundaryNet - An Attentive Deep Network with Fast Marching Distance Maps for Semi-automatic Layout Annotation

[ORAL PRESENTATION]

International Institute of Information Technology, Hyderabad

International Conference on Document Analysis and Recognition (ICDAR 2021)

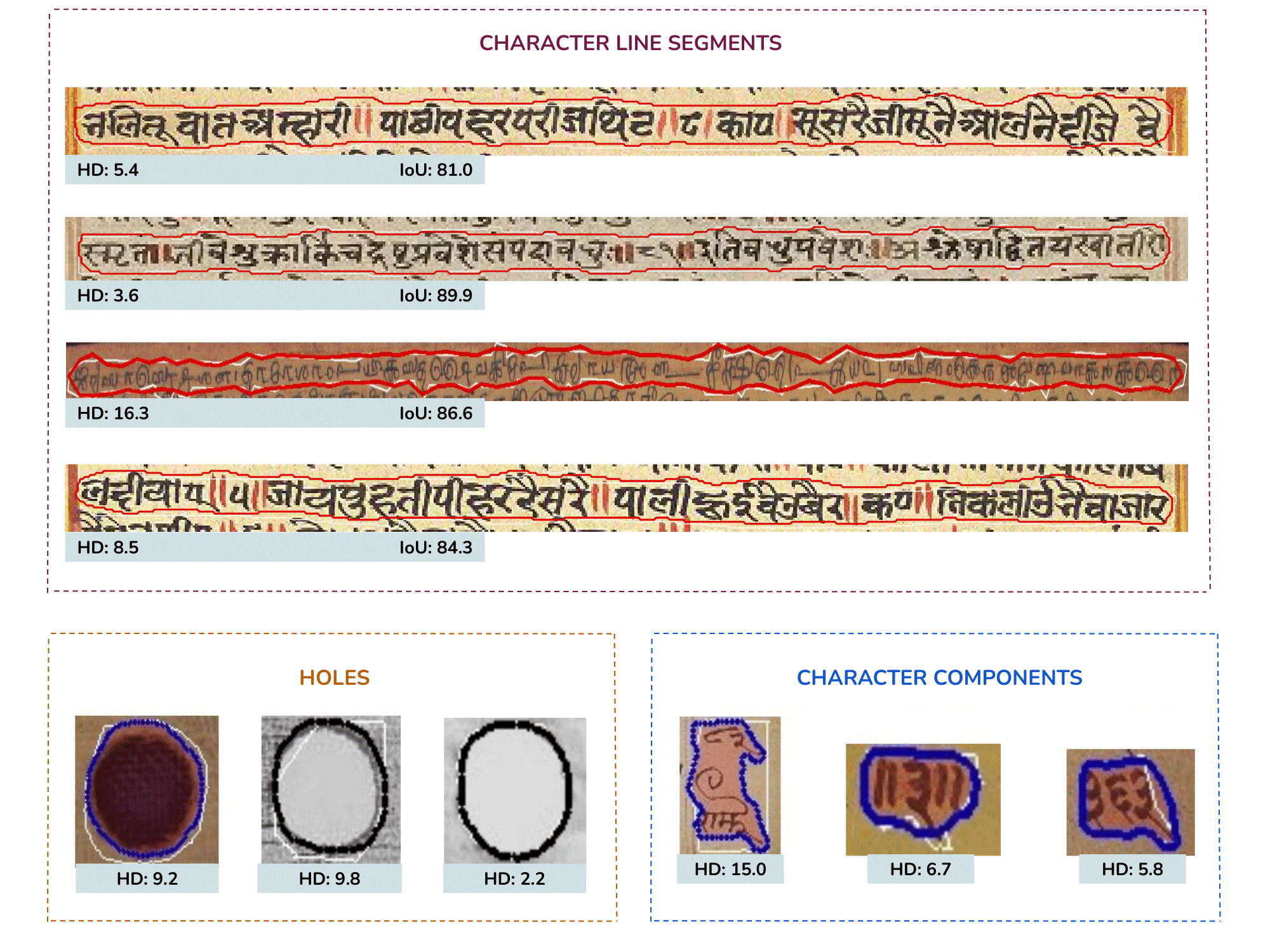

Explore BoundaryNet results with our interactive dashboard: [here]

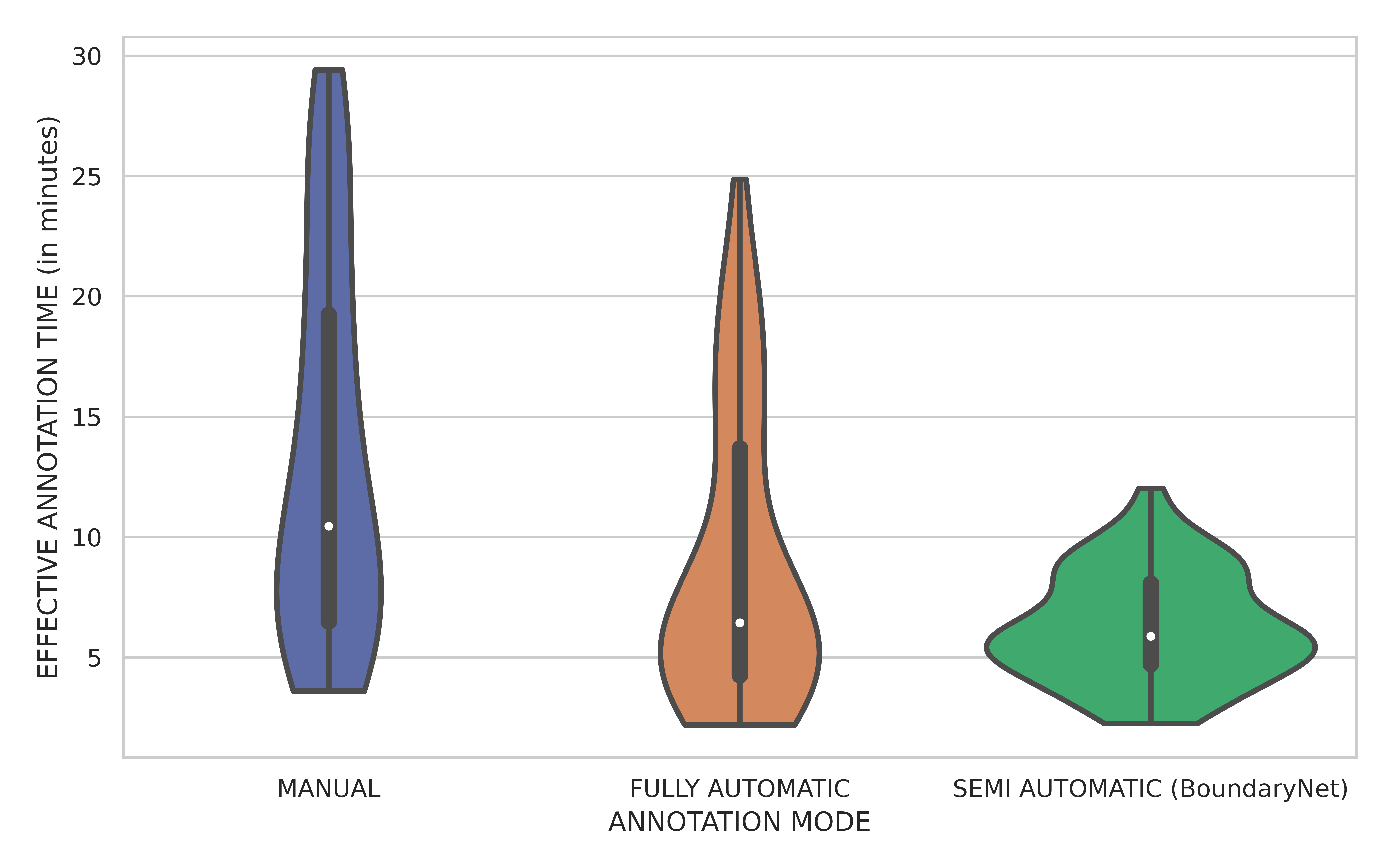

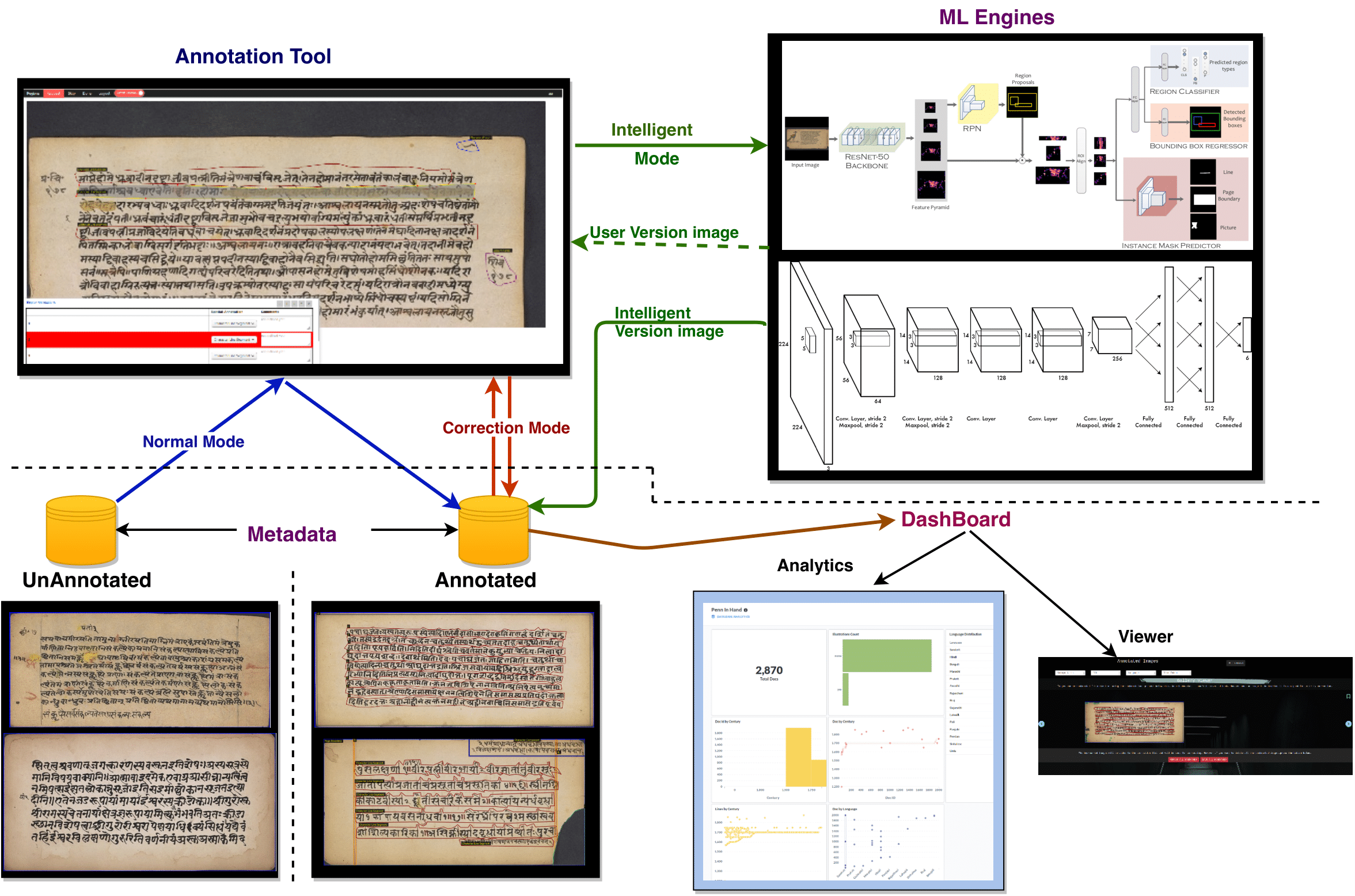

Comment: Features an intuitive annotation GUI and a graphical analytics dashboard, and interfaces with machine-learning-based intelligent modules for historical document annotation.